Fine-Tune

Fine-tuning is a common machine learning task: you continue training an existing model on a specific dataset to improve its performance on that data. Lepton provides an easy-to-use fine-tune feature for this.

This page walks through the steps to fine-tune a model in Lepton.

Fine-Tune a Model

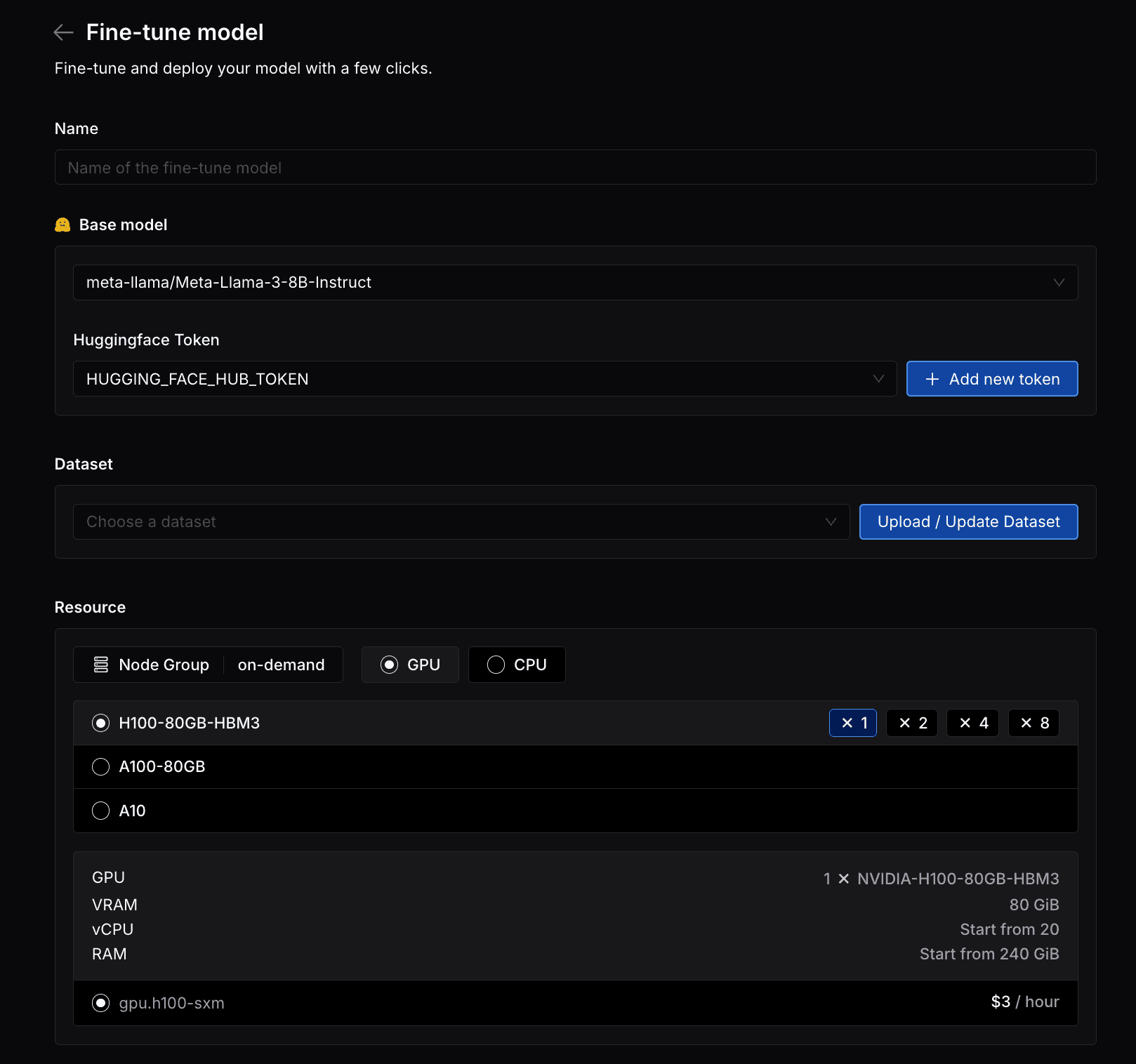

Navigate to the Fine-Tune page, where you will see a form for creating a fine-tune job.

Specify Base Model

First, select a base model to fine-tune. Currently, we support fine-tuning models from Hugging Face, and you can select a model from the dropdown list.

Some models are gated by Hugging Face. To use these models, you need to provide your Hugging Face token.

Upload Your Dataset

You can upload your own dataset to the Lepton file system by clicking the Upload / Update Dataset button.

The JSON file must match the following format: a top-level array of records, where each record has a "messages" list holding one conversation history, and each message in that list has a "role" and a "content". You can also view and download the example JSON data in the upload modal for reference.

    [
      {
        "messages": [
          {
            "role": "user",
            "content": "Hello, how are you?"
          },
          {
            "role": "assistant",
            "content": "I'm fine, thank you!"
          }
        ]
      },
      {
        "messages": [
          {
            "role": "user",
            "content": "What is the weather in Tokyo?"
          },
          {
            "role": "assistant",
            "content": "The weather in Tokyo is sunny."
          }
        ]
      }
    ]
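Before uploading, it can help to check a file against this structure locally. The following is a minimal sketch, not part of Lepton: the function name `validate_dataset` and the set of accepted roles are assumptions made for illustration (the example above only shows "user" and "assistant"; "system" is a guess), and Lepton's own validation may be stricter or looser.

```python
import json

# Assumption: only "role" and "content" are required per message. The role
# names are taken from the example above, plus "system" as a guess.
ASSUMED_ROLES = {"system", "user", "assistant"}


def validate_dataset(records):
    """Return a list of problems found; an empty list means the structure matches."""
    if not isinstance(records, list):
        return ["top level must be a JSON array"]
    problems = []
    for i, record in enumerate(records):
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"record {i}: missing or empty 'messages' list")
            continue
        for j, message in enumerate(messages):
            if not isinstance(message, dict):
                problems.append(f"record {i}, message {j}: must be an object")
                continue
            role = message.get("role")
            if not isinstance(role, str) or role not in ASSUMED_ROLES:
                problems.append(f"record {i}, message {j}: unexpected role {role!r}")
            if not isinstance(message.get("content"), str):
                problems.append(f"record {i}, message {j}: 'content' must be a string")
    return problems


sample = json.loads("""
[
  {"messages": [
    {"role": "user", "content": "Hello, how are you?"},
    {"role": "assistant", "content": "I'm fine, thank you!"}
  ]}
]
""")
print(validate_dataset(sample))  # prints []
```

To check a file on disk, load it with `json.load` and pass the result to `validate_dataset`; any returned strings point at the record and message that need fixing.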

Choose Resource

You can select the resource shape for your fine-tune job, which will determine the instance type and the number of GPUs you will use. Simply choose the node group and the type of GPU/CPU nodes that fit your needs.

Choose Tuning Method

You can also specify the tuning method.

Currently, we support three tuning methods: LoRA, Medusa and Full Fine-Tuning.

Select the method you want to use, and you can see the corresponding parameters you need to configure.
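As rough intuition for the difference between LoRA and Full Fine-Tuning, the sketch below compares the number of trainable parameters for a single weight matrix. It relies on the general definition of LoRA (the weight update is factored into two low-rank matrices) and says nothing about how Lepton configures it; the layer size and rank are hypothetical values chosen for illustration.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def full_trainable_params(d_out, d_in):
    """Full fine-tuning updates every entry of the d_out x d_in weight matrix."""
    return d_out * d_in


# Hypothetical layer size and LoRA rank, for illustration only.
d_out, d_in, rank = 4096, 4096, 8

lora = lora_trainable_params(d_out, d_in, rank)
full = full_trainable_params(d_out, d_in)
print(lora)          # prints 65536
print(full)          # prints 16777216
print(full // lora)  # prints 256
```

For this example layer, LoRA trains 256 times fewer parameters than full fine-tuning, which is why it typically needs less GPU memory.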

Advanced Configuration

In the advanced configuration section, you can adjust the following training parameters for your fine-tune job.

- Learning Rate: Specifies the learning rate for training, defaults to 0.0005.

- Training Epochs: Number of training epochs, defaults to 10.

- Training Batch Size: Training batch size per device (GPU or CPU), defaults to 32.

- Gradient Accumulation Steps: Number of gradient accumulation steps, defaults to 1.

- Warmup Ratio: Specifies the warmup ratio for learning rate scheduling, defaults to 0.10.

- Early Stop Threshold: Stops training early if the reduction in validation loss is less than this threshold, defaults to 0.01.
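To see how these settings interact, the sketch below works through the arithmetic using the defaults listed above. It is an illustration under stated assumptions, not Lepton's implementation: it assumes the effective batch size is the per-device batch size times the number of devices times the gradient accumulation steps, that warmup covers `warmup_ratio` of all optimizer steps, and that the early stop threshold compares the absolute drop in validation loss between two consecutive evaluations. The function names and the dataset and device counts are hypothetical.

```python
import math


def training_schedule(num_examples, num_devices, epochs=10,
                      per_device_batch_size=32, grad_accum_steps=1,
                      warmup_ratio=0.10):
    """Derive step counts from the configuration (assumed semantics, see lead-in)."""
    effective_batch = per_device_batch_size * num_devices * grad_accum_steps
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    total_steps = steps_per_epoch * epochs
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    return {
        "effective_batch": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        "warmup_steps": warmup_steps,
    }


def should_stop_early(previous_val_loss, current_val_loss, threshold=0.01):
    """Assumed rule: stop when validation loss improved by less than the threshold."""
    return (previous_val_loss - current_val_loss) < threshold


# Hypothetical job: 10,000 training examples on 4 GPUs with default settings.
print(training_schedule(num_examples=10_000, num_devices=4))
print(should_stop_early(0.50, 0.495))  # prints True (improved by only 0.005)
print(should_stop_early(0.50, 0.40))   # prints False (improved by 0.10)
```

Under these assumptions, raising Gradient Accumulation Steps increases the effective batch size without increasing per-device memory use, at the cost of fewer optimizer steps per epoch.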

Create the Fine-Tune Job

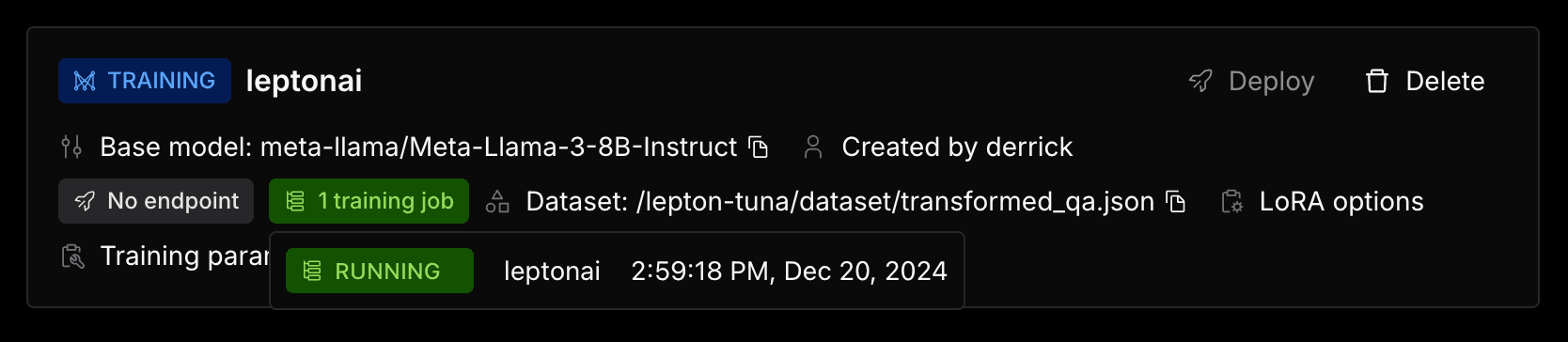

After you've set up the configuration, click the Create button to create the fine-tune job.

You can track the job status and progress on the Fine-Tune page.

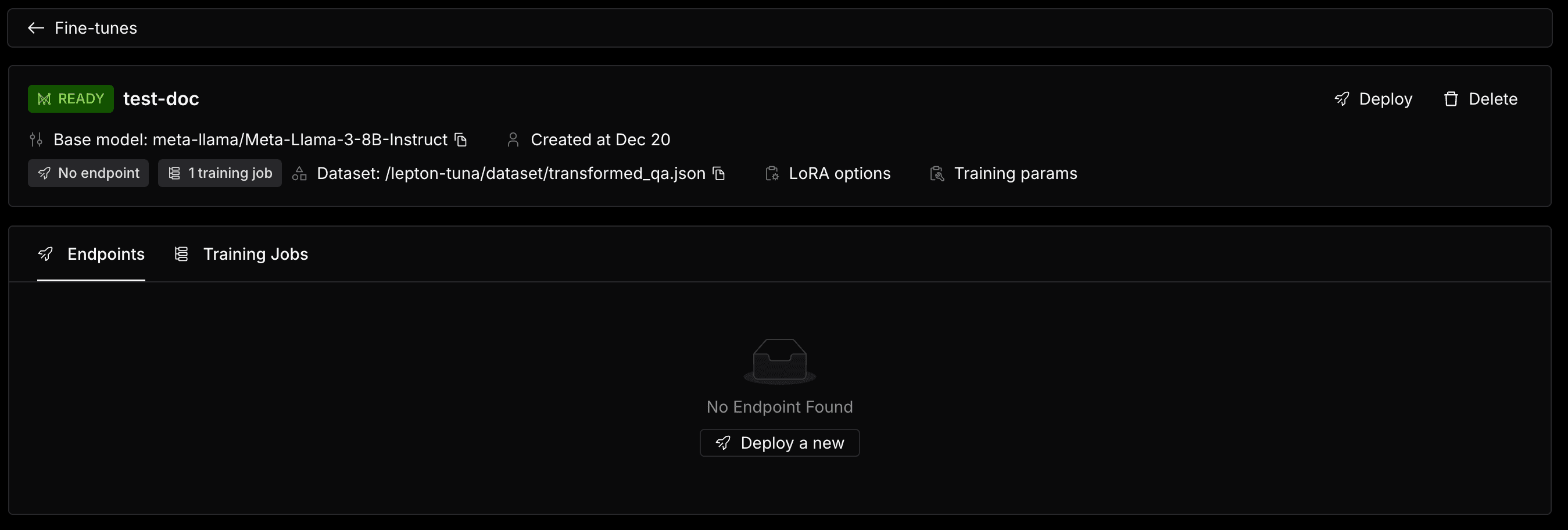

Deploy the Fine-Tuned Model

Once the fine-tune job has finished, you can deploy the fine-tuned model by clicking the Deploy button.

Click the Deploy a new button to create a dedicated endpoint for your fine-tuned model. For more configuration options for a dedicated endpoint, you can refer to this page.

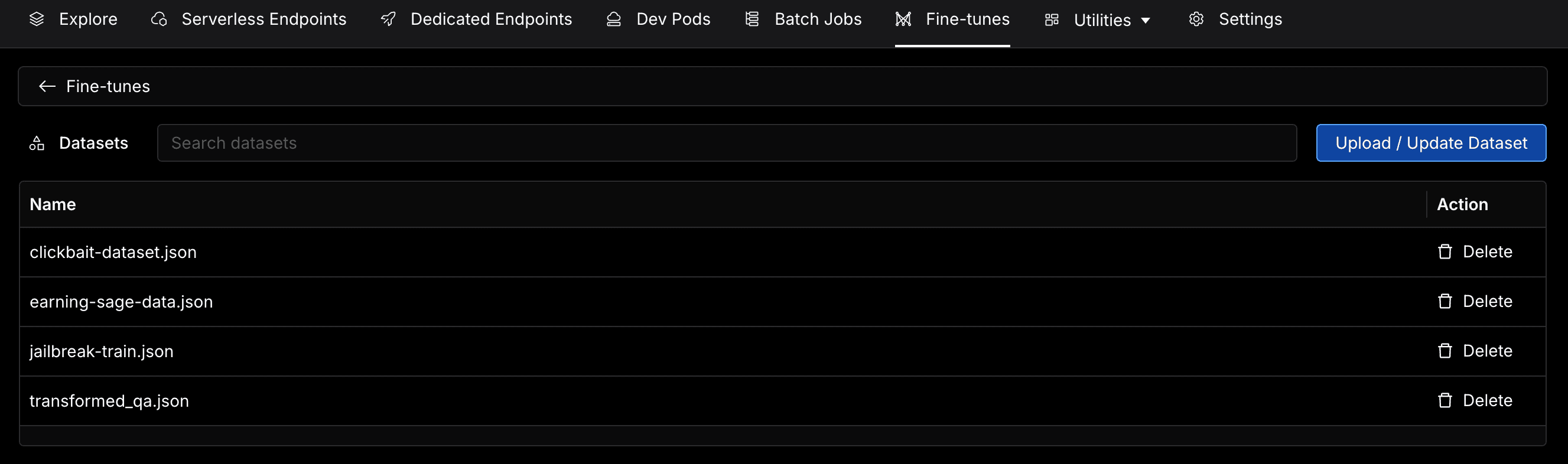

Manage Datasets

You can also manage your datasets on the Datasets page.