Storage

Storage is a core component of Lepton that provides services for data management and processing. It includes the following services and features:

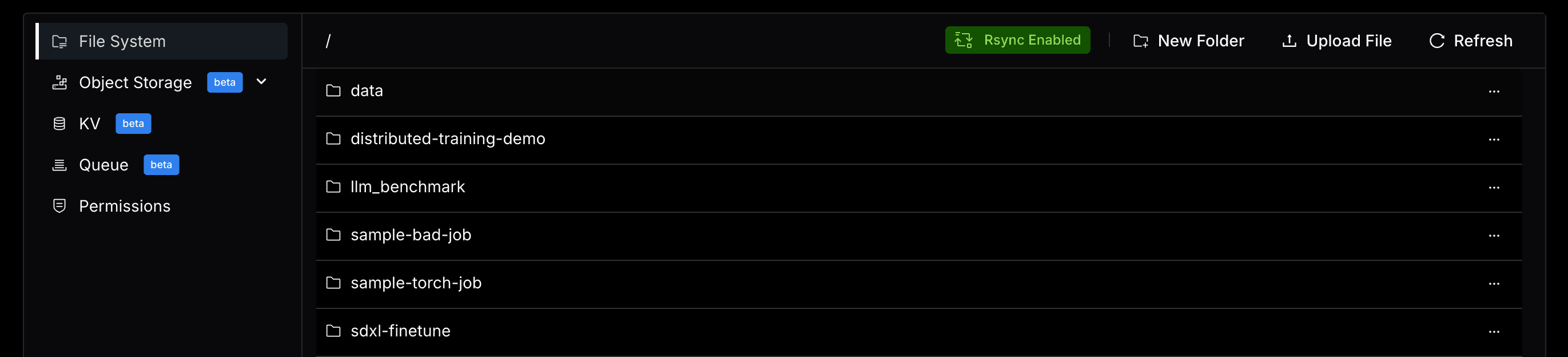

- File System is a persistent remote storage service that offers POSIX filesystem semantics.

- Object Storage provides a simple way to transfer small files between deployments and between a deployment and the client.

- Key-Value Store is a fast and scalable storage service that can be used to store and retrieve key-value pairs.

- Queue provides a flexible, reliable means of managing a stream of data within your application.

- Permissions are managed at the user level, allowing fine-grained access control to specific paths within the file system.
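Because the File System offers POSIX semantics, code written against ordinary file APIs should work against it without a storage-specific client. The sketch below uses only Python's standard library. It assumes the file system is mounted into the deployment at some directory; `mount_point` is a hypothetical parameter, and a local temporary directory stands in for the mount so the example runs anywhere.

```python
import os
import tempfile


def write_and_read(mount_point: str) -> list[str]:
    """Create, append to, and read back a file using plain POSIX-style file I/O."""
    # Nested directories are created exactly as on a local disk.
    log_dir = os.path.join(mount_point, "runs", "run-001")
    os.makedirs(log_dir, exist_ok=True)
    log_path = os.path.join(log_dir, "train.log")

    # Write, then append, using ordinary file handles.
    with open(log_path, "w", encoding="utf-8") as f:
        f.write("step 1\n")
    with open(log_path, "a", encoding="utf-8") as f:
        f.write("step 2\n")

    # Read the file back as a list of lines.
    with open(log_path, encoding="utf-8") as f:
        return f.read().splitlines()


if __name__ == "__main__":
    # Hypothetical stand-in: a local temporary directory plays the role of the mount point.
    with tempfile.TemporaryDirectory() as mount_point:
        print(write_and_read(mount_point))  # ['step 1', 'step 2']
```

Nothing in the function refers to Lepton, so the same code could run against a local directory during development and against the mounted file system in a deployment.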
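The page does not describe how path-level permission rules are written or evaluated. The sketch below is therefore a hypothetical model of per-user, path-scoped access control, not Lepton's implementation. The grant table `GRANTS`, the action names `"read"`/`"write"`, and the "most specific prefix wins, deny by default" rule are all assumptions made for illustration.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical grant table: user -> {path prefix -> allowed actions}.
# This is not Lepton's actual configuration format.
GRANTS: dict[str, dict[str, set[str]]] = {
    "alice": {"/": {"read"}, "/projects/alice": {"read", "write"}},
    "bob": {"/shared": {"read"}},
}


def is_allowed(user: str, path: str, action: str) -> bool:
    """Return True if the most specific grant covering `path` permits `action`."""
    if not path.startswith("/"):
        return False  # only absolute paths are checked; deny anything else
    # Collapse "." and ".." so "/projects/alice/../bob" cannot escape a grant.
    target = PurePosixPath(posixpath.normpath(path))
    best_prefix, best_actions = None, set()
    for prefix, actions in GRANTS.get(user, {}).items():
        p = PurePosixPath(prefix)
        # Compare whole path components, so "/shared" does not cover "/shared2".
        if target == p or p in target.parents:
            if best_prefix is None or len(p.parts) > len(best_prefix.parts):
                best_prefix, best_actions = p, actions
    # No matching grant leaves best_actions empty, so access is denied.
    return action in best_actions
```

For example, `is_allowed("alice", "/projects/alice/data.csv", "write")` returns `True`, while `is_allowed("bob", "/shared2/notes.txt", "read")` returns `False`. Letting the longest matching prefix win means a narrower grant can override a broader one. Denying access when no grant matches keeps the model fail-closed.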